Artificial intelligence is revolutionising banking, optimising services and enriching the customer experience. However, this transformation comes with major regulatory challenges. The Artificial Intelligence Regulation (AI Act), adopted on 13 June 2024, establishes a harmonised legal framework within the European Union for the use of AI, particularly in sensitive sectors such as banking.

The AI Act marks a major step forward. It provides the European Union with a comprehensive legal framework for artificial intelligence. This legislation comes at a time when AI is becoming essential in the banking sector, influencing customer relations, risk management and financial decisions.

For European banks, this regulation goes beyond mere technological regulation. It poses a strategic challenge: combining technological innovation with compliance with constantly changing regulations.

The growing adoption of AI in banks

From chatbots and the automation of credit processes to fraud detection, AI is emerging as a key tool for transforming banking practices.

Its adoption is accelerating rapidly. A 2023 McKinsey study forecasts growth of 30 % per year through 2026. The biggest increase in its use (+45 %) will be in customer services.

Banks are investing massively in AI. In 2024, they invested more than €150 billion, or 13 % of global investment in AI. This trend can be explained by the many advantages that AI promises banks:

● Process automation

AI automates tedious and repetitive tasks, such as processing loan applications, detecting fraud and checking documents. This enables banks to improve operational efficiency, reduce costs and free up their employees for higher value-added tasks.

For example, BNP Paribas has reduced the time it takes to process loan applications by 80 % thanks to AI.

● Improving the customer experience

AI personalises banking services and enables 24/7 customer support. AI-powered chatbots answer questions, provide personalised financial advice and help manage accounts. This improves customer satisfaction and loyalty.

ING Belgium has rolled out "Ida", a virtual assistant which processes more than 70 % of customer requests without human intervention. According to Xerfi, banks should increase their annual revenues by 3 to 5 % by 2025 thanks to AI.

● Risk management

AI helps banks to proactively identify and manage risk. It detects fraud, assesses the creditworthiness of borrowers, forecasts credit losses and monitors suspicious transactions. This reduces financial risk.

Crédit Mutuel, for example, has reduced its losses from bank card fraud by 30 % thanks to AI. For its part, Crédit Agricole is using AI for the predictive analysis of credit risks, reducing the default rate by 23 % on certain customer segments.

The AI Act: a risk-based approach

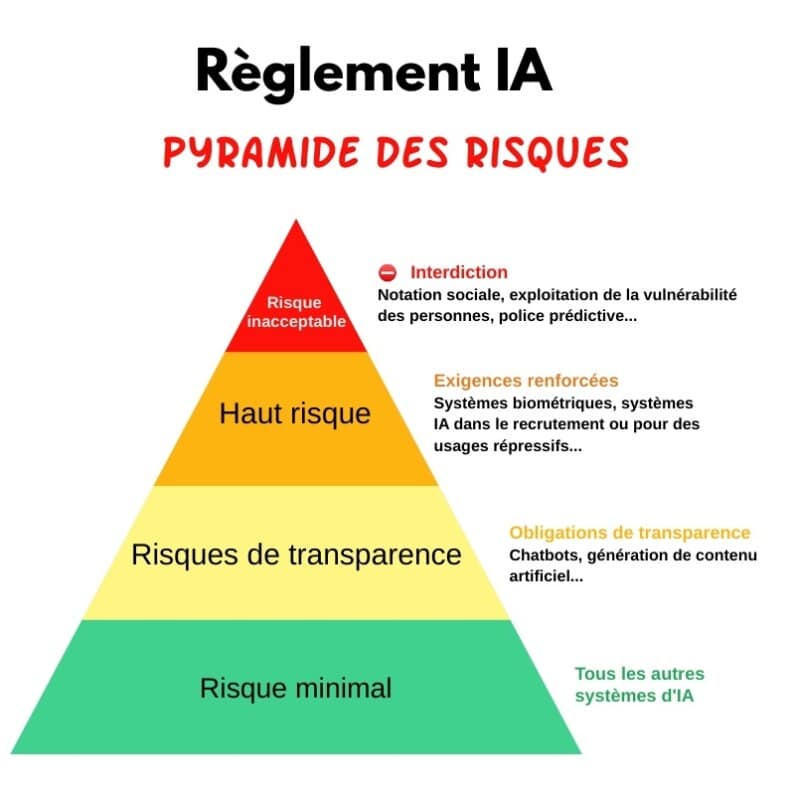

Against this backdrop, the AI Regulation introduces a harmonised legal framework to support the growing use of AI while rigorously framing the risks associated with its deployment.

In particular, this regulation stands out for its risk-based approach. It prioritises obligations according to the potential for harm from AI systems.

©Alexandre Salque/ORSYS

This model is particularly suited to the banking sector, where risk-based regulation is already a fundamental principle, enshrined in the Capital Requirements Directive (CRD, the prudential framework for credit institutions) and other sectoral standards.

The AI Regulation therefore complements existing obligations by addressing the risks that AI poses to individuals' fundamental rights.

Classification of AI systems: a key issue for banks

One of the major contributions of the AI Regulation lies in the classification of AI systems according to their level of risk, ranging from those deemed "unacceptable" to those deemed "low risk".

For banking institutions, the importance of this classification is obvious, since several common uses of AI, such as credit scoring models and customer profiling systems, are now considered "high-risk".

Banks will thus be subject to enhanced obligations, particularly in terms of risk management, transparency and accountability for decisions taken by AI systems. These requirements include, among others, the implementation of a governance framework and the obligation to ensure full traceability of automated decisions.

While this approach strengthens the protection of fundamental rights, it also requires financial institutions to review their internal processes to ensure compliance, in particular with the Charter of Fundamental Rights of the European Union and the General Data Protection Regulation (GDPR).
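To make the classification logic concrete, here is a minimal sketch of how a bank might keep an inventory of its AI use cases and look up the enhanced obligations attached to each. It is an illustration under stated assumptions, not a legal classification: the use-case names, the `LIMITED` tier, and every tier assignment other than credit scoring and customer profiling are hypothetical.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high-risk"
    LIMITED = "limited risk"  # assumption: intermediate tier, not named in this article
    LOW = "low risk"


# Hypothetical inventory of a bank's AI use cases.
# Only the two HIGH entries follow the article; the others are illustrative assumptions.
USE_CASE_TIERS = {
    "credit_scoring": RiskTier.HIGH,
    "customer_profiling": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,  # assumption
    "document_checking": RiskTier.LOW,     # assumption
}

# Enhanced obligations listed in the article for high-risk systems.
HIGH_RISK_OBLIGATIONS = (
    "risk management",
    "transparency",
    "accountability",
    "governance framework",
    "traceability of automated decisions",
)


def obligations_for(use_case: str) -> tuple[str, ...]:
    """Return the enhanced obligations for a use case in this toy inventory."""
    tier = USE_CASE_TIERS.get(use_case)
    if tier is None:
        # An unclassified system is itself a compliance gap, so fail loudly.
        raise KeyError(f"unclassified use case: {use_case!r}")
    if tier is RiskTier.UNACCEPTABLE:
        raise ValueError(f"{use_case!r} is classified as unacceptable")
    # Lighter duties that may apply below the high-risk tier are not modelled here.
    return HIGH_RISK_OBLIGATIONS if tier is RiskTier.HIGH else ()


if __name__ == "__main__":
    for name in USE_CASE_TIERS:
        print(name, "->", list(obligations_for(name)))
```

Raising an error for unclassified use cases is a deliberate choice in this sketch: a system that has not been assessed should block deployment rather than silently default to the lowest tier.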

Convergence with prudential regulations: a challenge for banks

One of the special features of the AI Regulation is that it is consistent with other European legislation, particularly that governing the banking sector.

For example, compliance with the CRD, which imposes requirements on the governance of financial institutions, could in part be seen as fulfilling certain obligations under the AI Regulation, particularly in relation to risk management and internal control.

However, although adjustments have been considered to avoid overlaps between the requirements of the AI Act and those of banking legislation, uncertainties remain as to how these two regulatory frameworks will coordinate in practice.

Some banks could therefore find themselves facing a dual challenge: ensuring compliance with a set of prudential standards while meeting the specific obligations imposed by the AI Regulation.

The risk of duplication or contradiction in standards must be managed, otherwise additional compliance costs will be incurred and technological innovation will be slowed down.

The need for transparency and accountability

The AI Regulation imposes particularly strict requirements for transparency and accountability, especially for so-called "high-risk" AI systems. Banking institutions will now have to guarantee both the traceability and the explainability of the decisions taken by their algorithms vis-à-vis their customers.

In a sector where trust and transparency are imperatives, the introduction of such requirements is a significant step towards ethical and responsible AI.

For banks, this implies a real cultural revolution, prompting them to review their approach to customer service and decision-making and to introduce effective human control processes. In this sense, the AI Regulation is not just a regulatory tool; it also encourages banking institutions to redefine their operating model in order to proactively integrate these new requirements.
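As an illustration of what traceability, explainability and human control could look like in practice, the sketch below records one automated decision together with the model version, the inputs used, the main factors behind the outcome, and the human reviewer, if any. The field names and the review rule are assumptions made for this example; the regulation does not prescribe this format.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    """One automated decision, stored so it can be traced and explained later."""
    decision_id: str
    model_version: str
    inputs: dict
    outcome: str
    main_factors: list                 # plain-language reasons that can be given to the customer
    reviewed_by: Optional[str] = None  # filled in once a human has reviewed the decision
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # One JSON document per decision, suitable for an append-only log.
        return json.dumps(asdict(self), sort_keys=True)


def needs_human_review(record: DecisionRecord) -> bool:
    """Toy rule (an assumption): a rejection waits until a human has reviewed it."""
    return record.outcome == "rejected" and record.reviewed_by is None


if __name__ == "__main__":
    record = DecisionRecord(
        decision_id="d-001",
        model_version="scoring-v1",
        inputs={"income": 42000, "debt_ratio": 0.48},
        outcome="rejected",
        main_factors=["debt ratio above threshold"],
    )
    print(needs_human_review(record))
    record.reviewed_by = "analyst_17"
    print(record.to_json())
```

Storing the explanation factors alongside the decision, rather than recomputing them later, means the bank can show a customer the reasons that applied at the time, even after the model has been retrained.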

The AI Act: a catalyst for regulated innovation

In addition to its binding nature, the AI Regulation is also intended to encourage innovation, particularly through the introduction of regulatory sandboxes. These experimental spaces enable banks to test their AI solutions under regulatory supervision. They represent a valuable opportunity for the banking sector, making it possible to reconcile technological development with legal compliance.

For banking institutions, these sandboxes could provide a secure framework for experimenting with AI applications such as automated banking services, algorithms for personalising offerings and predictive risk management tools, while ensuring that these technologies comply with security, transparency and consumer rights protection requirements.

Striking a new balance: innovation, safety and compliance

The AI regulation presents banks with a major challenge: successfully integrating AI into their processes while complying with enhanced legal obligations. They will have to strike a delicate balance between reducing the risks associated with AI and promoting technological innovation, which is essential to their competitiveness.

This regulation does more than simply regulate the use of AI; it imposes a vision of AI at the service of humans, in which the protection of citizens' fundamental rights and the guarantee of greater transparency in automated decisions become strategic priorities. As a result, financial institutions will not only have to comply with a demanding new legal framework, but also demonstrate their ability to use AI as an ethical lever of digital transformation to promote confidence and financial inclusion.

Conclusion

The AI Act represents a decisive step in the regulation of AI technologies in Europe, and particularly for the banking sector. As well as providing a regulatory framework to manage the risks associated with the use of AI, it also paves the way for changes in the practices and processes of banking institutions, encouraging them to incorporate stricter standards in terms of transparency, responsibility and respect for fundamental rights.

However, if this regulation is to have a truly positive impact, it must be implemented in a way that is consistent with existing banking legislation, while ensuring that it does not hold back the technological innovation that is vital to banks' competitiveness in a globalised environment.

The next stages in its deployment should aim to clarify the grey areas and ensure a harmonious application, conducive to the ethical and responsible use of artificial intelligence in the banking sector.