The AI Act (Artificial Intelligence Act) is a European regulation adopted on 21 May 2024 and published in the Official Journal of the EU on 12 July 2024. It regulates the development and use of artificial intelligence within the European Union by establishing harmonised rules for the placing on the market, putting into service and use of AI systems in the EU.

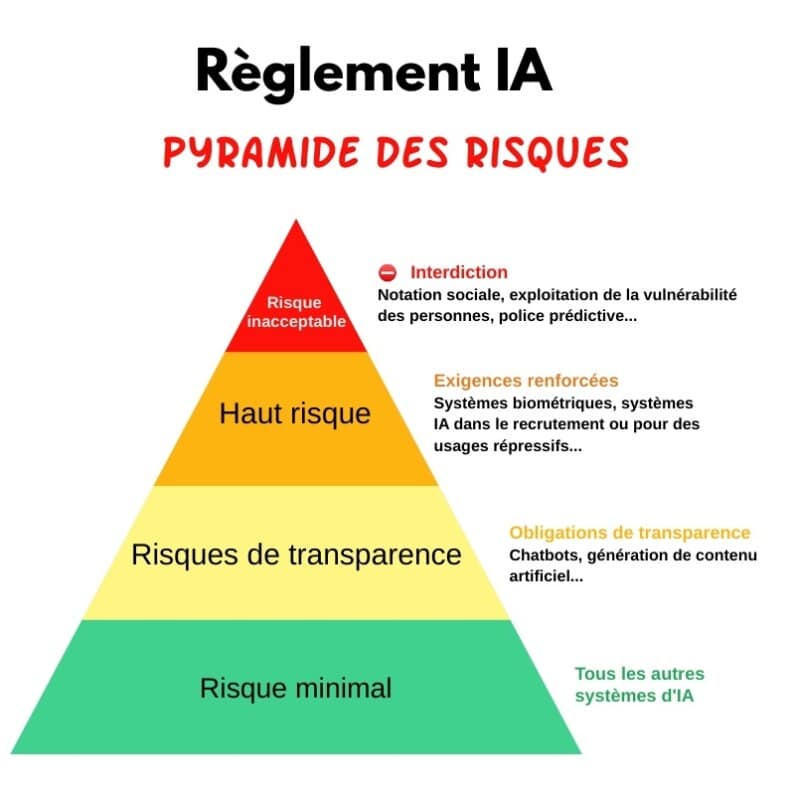

The AI Act classifies AI applications into four levels of risk:

- Unacceptable risk: prohibited practices such as subliminal manipulation or the exploitation of vulnerabilities.

- High risk: applications subject to specific legal requirements.

- Limited risk: applications subject to transparency requirements.

- Minimal risk: largely unregulated applications.

AI Act risk pyramid - ©Alexandre SALQUE/ORSYS le mag
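For readers who track AI systems in software, for example in an internal inventory, the four tiers can be sketched as a small lookup table. This is an illustrative sketch only: the names `RiskLevel`, `TREATMENT` and `is_prohibited` are hypothetical, the third tier is labelled with the commonly used term "limited risk", and the descriptions simply restate the list above rather than the legal text.

```python
from enum import Enum


class RiskLevel(Enum):
    """Hypothetical labels for the four tiers of the risk pyramid."""
    UNACCEPTABLE = 4  # top of the pyramid
    HIGH = 3
    LIMITED = 2       # third tier (transparency requirements)
    MINIMAL = 1       # base of the pyramid


# Regulatory treatment of each tier, as summarised in the list above.
TREATMENT = {
    RiskLevel.UNACCEPTABLE: "prohibited",
    RiskLevel.HIGH: "subject to specific legal requirements",
    RiskLevel.LIMITED: "subject to transparency requirements",
    RiskLevel.MINIMAL: "largely unregulated",
}


def is_prohibited(level: RiskLevel) -> bool:
    """Return True only for the unacceptable-risk tier."""
    return level is RiskLevel.UNACCEPTABLE
```

Deciding which tier a given system actually falls into is a legal assessment that a lookup like this cannot perform; it only records the outcome.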

The main objectives of the AI Act are:

- Ensuring that AI systems placed on the market are safe and respect fundamental rights.

- Promoting human-centred, trustworthy AI.

- Facilitating investment and innovation in AI, particularly for SMEs.

- Harmonising regulations within the EU to improve the functioning of the internal market.

The AI Act will be implemented gradually, with key dates such as 2 February 2025 for the ban on certain AI practices (the unacceptable risks, shown in red on the pyramid) and 2 August 2026 for the general application of the regulation.

To find out more, read the article: